The effect of object reflectivity on the ToF camera

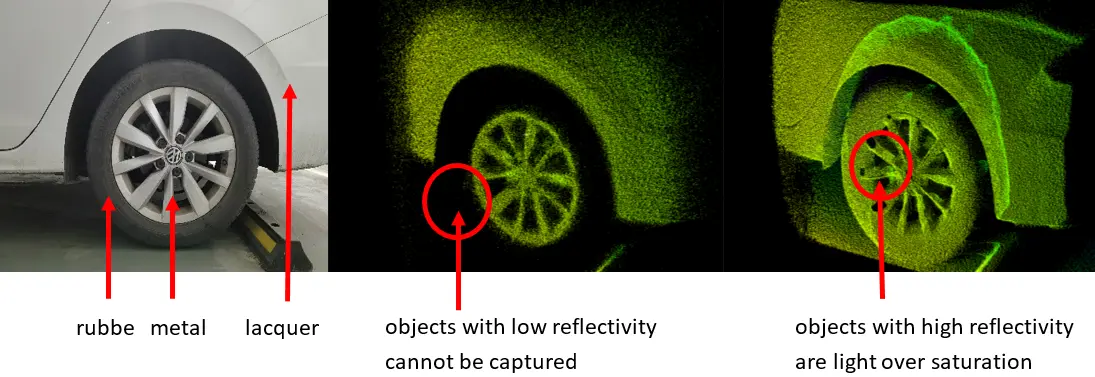

Objects of different colors and materials reflect light to different degrees. An indirect time-of-flight (ToF) camera measures distance by emitting its own modulated laser light. Because reflectivity varies from object to object, the same emitted intensity returns different amounts of reflected energy. For low-reflectivity objects, the reflected signal is weak and may fall below the sensor's effective threshold, so they cannot be imaged. For high-reflectivity objects, the reflected intensity exceeds the upper limit of the sensor's effective range, and the sensor oversaturates, as shown in the diagram above.

What is sensor oversaturation?

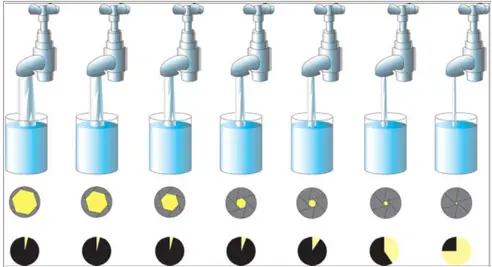

Whether a sensor oversaturates depends on the aperture and the exposure time. This can be likened to filling a container from a tap, as shown in the diagram above: the aperture corresponds to how far the valve is opened, which controls the light flux per unit time, and the exposure time corresponds to how long the valve stays open, which controls the total amount of light collected.

For an individual pixel, oversaturation occurs when the incoming light is strong enough that the pixel can no longer store any more charge. Once saturated, the pixel cannot hold additional charge, and the excess spreads to neighboring pixels, causing them to saturate or report erroneous values. In the sensor output, oversaturation appears as black spots in depth images and white spots in IR images.
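The bucket analogy and the spill-over effect can be sketched with a simplified one-dimensional model. This is an illustration under stated assumptions, not a model of any real sensor: each pixel collects charge proportional to flux × exposure time, charge above a hypothetical full-well `capacity` is split equally between the two direct neighbors (one step only, no further propagation), and every pixel is clipped at `capacity`. The function name `expose_row` and all numbers are hypothetical.

```python
from typing import List


def expose_row(flux: List[float], exposure: float, capacity: float) -> List[float]:
    """Simplified 1-D model of charge accumulation with one-step blooming.

    Each pixel collects flux * exposure. Charge above `capacity` (a hypothetical
    full-well capacity) is split equally between the two direct neighbours, and
    every pixel is clipped at `capacity`. Spill past the row ends is discarded.
    """
    raw = [f * exposure for f in flux]          # charge each pixel would collect
    charge = [min(q, capacity) for q in raw]    # a pixel cannot hold more than capacity
    for i, q in enumerate(raw):
        excess = q - capacity
        if excess > 0:                          # oversaturated pixel: spill the excess
            for j in (i - 1, i + 1):
                if 0 <= j < len(charge):
                    charge[j] = min(charge[j] + excess / 2, capacity)
    return charge


if __name__ == "__main__":
    print(expose_row([1.0, 1.0, 10.0, 1.0, 1.0], exposure=100.0, capacity=500.0))
    print(expose_row([1.0, 1.0, 20.0, 1.0, 1.0], exposure=100.0, capacity=500.0))
```

In the first call the center pixel clips at 500 and its neighbors read 350 instead of the 100 they actually received (an error); in the second call the spilled charge is large enough to saturate the neighbors as well.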

To image low-reflectivity objects well, the exposure time must be increased to strengthen the signal, but this causes high-reflectivity objects to oversaturate. Conversely, reducing the exposure time so that high-reflectivity objects image well leaves low-reflectivity objects with too little signal to be imaged. To handle scenes in which high-reflectivity and low-reflectivity objects coexist in the target region, we use High Dynamic Range (HDR) imaging.
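The trade-off can be made concrete with a linear signal model, assumed here purely for illustration: signal = `K` × reflectivity × exposure time, usable only between a hypothetical lower limit `MIN_SIGNAL` and saturation limit `MAX_SIGNAL`. Each reflectivity then has its own window of usable exposure times, and one exposure can serve two objects only if their windows overlap. Under this model that happens only when the ratio of the two reflectivities does not exceed `MAX_SIGNAL / MIN_SIGNAL`. The constants and function names are hypothetical.

```python
from typing import Optional, Tuple

K = 5.0              # hypothetical signal units per microsecond at reflectivity 1.0
MIN_SIGNAL = 50.0    # hypothetical lower limit: weaker returns cannot be measured
MAX_SIGNAL = 4000.0  # hypothetical saturation limit


def exposure_window(reflectivity: float) -> Tuple[float, float]:
    """Exposure times (us) that keep K * reflectivity * t within the usable range."""
    rate = K * reflectivity
    return MIN_SIGNAL / rate, MAX_SIGNAL / rate


def common_exposure(r_a: float, r_b: float) -> Optional[Tuple[float, float]]:
    """Exposure range usable for both reflectivities, or None if none exists."""
    lo_a, hi_a = exposure_window(r_a)
    lo_b, hi_b = exposure_window(r_b)
    lo, hi = max(lo_a, lo_b), min(hi_a, hi_b)
    return (lo, hi) if lo <= hi else None


if __name__ == "__main__":
    print(common_exposure(0.3, 0.95))    # windows overlap
    print(common_exposure(0.005, 0.95))  # no single exposure works
```

With these numbers, surfaces with reflectivity 0.3 and 0.95 share a window of roughly 33 to 842 μs, whereas a 0.005-reflectivity black surface needs at least 2000 μs, long after the 0.95 surface has saturated.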

What is HDR technology?

HDR uses multi-frame fusion: two images captured with different exposure settings are fused into a single, better image. This lets HDR capture areas that would otherwise be undetectable or oversaturated in a single exposure, giving a larger dynamic range.
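A minimal sketch of the fusion step, assuming two already-computed depth frames of equal size plus a per-pixel flag marking where the long exposure saturated. The selection rule used here (keep the long-exposure depth, substitute the short-exposure depth where the long exposure saturated) mirrors the principle of using the long exposure as the base and filling its oversaturated regions from the short exposure. The function name `fuse_hdr`, the flat-list layout and the use of `None` for invalid pixels are assumptions for illustration.

```python
from typing import List, Optional

# Depth in millimetres per pixel; None marks a pixel with no valid depth.
Depth = List[Optional[float]]


def fuse_hdr(long_depth: Depth, short_depth: Depth, long_saturated: List[bool]) -> Depth:
    """Keep long-exposure depth; take short-exposure depth where the long one saturated."""
    if not (len(long_depth) == len(short_depth) == len(long_saturated)):
        raise ValueError("frames and mask must have the same number of pixels")
    return [short_px if saturated else long_px
            for long_px, short_px, saturated in zip(long_depth, short_depth, long_saturated)]


if __name__ == "__main__":
    long_frame = [912.0, None, 905.0]    # middle pixel saturated in the long exposure
    short_frame = [None, 898.0, 903.0]   # first pixel too dim in the short exposure
    print(fuse_hdr(long_frame, short_frame, [False, True, False]))
```

The sample values are invented; they only show that each output pixel comes from whichever exposure measured it validly.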

Principle of HDR

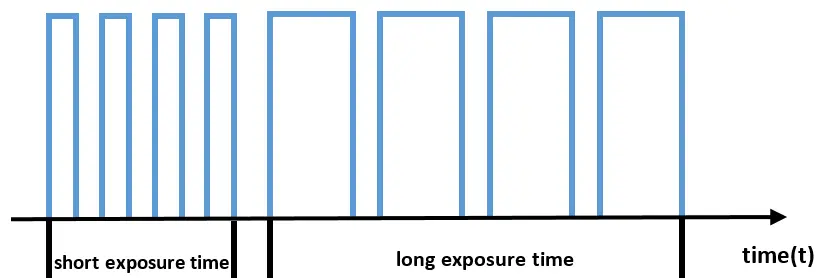

In HDR mode, a long exposure ensures that low-reflectivity areas are properly imaged, and a short-exposure measurement at its own modulation frequency is then added to measure the regions that oversaturate during the long exposure. The short exposure time is chosen relative to the long one, generally 1/8 to 1/10 of the long exposure time.
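The 1/8 to 1/10 rule of thumb is simple arithmetic; the helper below (its name is hypothetical) returns the suggested short-exposure range for a given long exposure.

```python
from typing import Tuple


def short_exposure_range(long_exposure_us: float) -> Tuple[float, float]:
    """Suggested HDR short exposure (min, max) in microseconds: 1/10 to 1/8 of the long one."""
    if long_exposure_us <= 0:
        raise ValueError("long exposure must be positive")
    return long_exposure_us / 10, long_exposure_us / 8


if __name__ == "__main__":
    low, high = short_exposure_range(1000.0)
    print(f"short exposure: {low:.0f} to {high:.0f} us")
```

For a 1000 μs long exposure, such as the one in the demonstration later in this article, this gives a short exposure of about 100 to 125 μs. Under a linear signal model, that ratio lets the short exposure register returns roughly 8 to 10 times stronger than the long exposure can handle before saturating.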

The timing of the exposures that HDR uses to generate one frame is shown in the figure above.

The modulation frequencies are selected according to the application's requirements, balancing measurement accuracy and precision, measurement distance, power consumption, frame rate, and other factors.
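One reason frequency choice affects measurement distance is phase wrapping: an indirect ToF camera infers distance from the phase shift of the modulated light, so a single frequency f measures distance unambiguously only up to c / (2f), and a phase shift φ corresponds to a distance of c·φ / (4πf). These are the standard relations; the function names below are illustrative, and the frequencies are those used in the demonstration that follows.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def unambiguous_range_m(freq_hz: float) -> float:
    """Maximum distance before the measured phase wraps around: c / (2 f)."""
    return C / (2 * freq_hz)


def depth_from_phase_m(phase_rad: float, freq_hz: float) -> float:
    """Distance for a phase shift in [0, 2*pi): c * phase / (4 * pi * f)."""
    return C * phase_rad / (4 * math.pi * freq_hz)


if __name__ == "__main__":
    for mhz in (120, 60, 20):
        print(f"{mhz} MHz -> {unambiguous_range_m(mhz * 1e6):.3f} m")
```

This gives about 1.25 m at 120 MHz, 2.5 m at 60 MHz and 7.5 m at 20 MHz. For the same phase noise, a higher frequency generally yields finer depth resolution but wraps sooner, which is why multi-frequency cameras commonly combine a high and a low frequency.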

HDR function demonstration

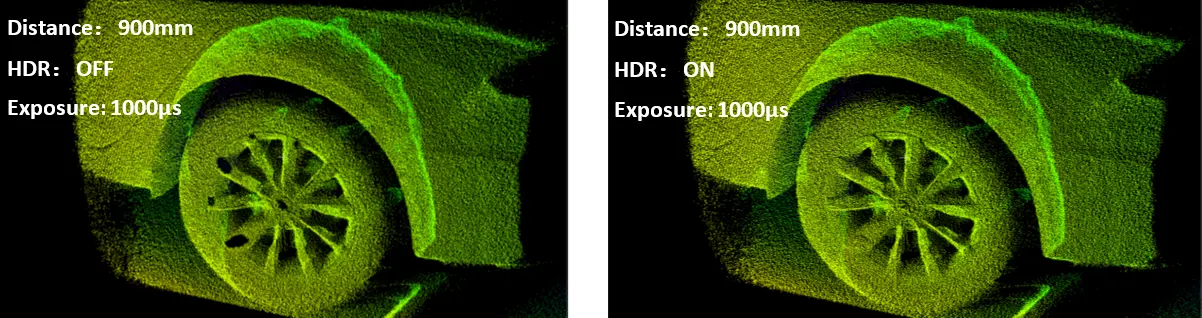

The camera was placed 900 mm from the measured object with an exposure time of 1000 μs. The long exposures used modulation frequencies of 120 MHz and 20 MHz, and the short exposure used 60 MHz. The figure above shows the images obtained with HDR turned on and off, respectively.

With HDR turned on, both the low-reflectivity black tire and the high-reflectivity wheel hub are visible in the depth map.

Problems with HDR

HDR mode cannot handle every complex scenario in which high-reflectivity and low-reflectivity objects coexist:

1. Oversaturation may still occur when measuring high-reflectivity objects at close range.

2. In the HDR-filled areas, the depth values measured during the short exposure at different frequencies show excessive jitter.
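The jitter in point 2 can be quantified by capturing a static scene repeatedly and computing each pixel's standard deviation over time; comparing the values inside and outside the HDR-filled area then shows how much noisier the filled pixels are. The sketch below assumes depth frames given as equal-length flat lists in millimeters; the function name `temporal_jitter` is hypothetical.

```python
import statistics
from typing import List, Sequence


def temporal_jitter(frames: Sequence[Sequence[float]]) -> List[float]:
    """Per-pixel population standard deviation of depth across repeated frames."""
    if len(frames) < 2:
        raise ValueError("need at least two frames")
    if len({len(f) for f in frames}) != 1:
        raise ValueError("all frames must have the same number of pixels")
    # zip(*frames) groups the values of each pixel across all frames
    return [statistics.pstdev(values) for values in zip(*frames)]


if __name__ == "__main__":
    frames = [[900.0, 905.0], [900.0, 895.0], [900.0, 905.0], [900.0, 895.0]]
    print(temporal_jitter(frames))
```

Here the first pixel is perfectly stable and the second alternates by ±5 mm; the sample frames are invented for illustration.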